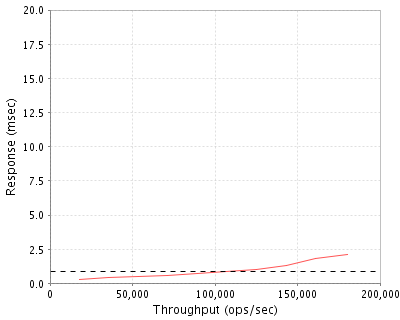

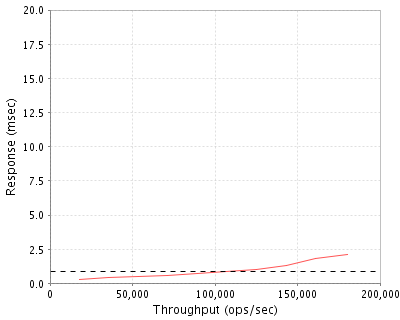

| Avere Systems, Inc. | : | FXT 3800 (3 Node Cluster, FlashCloud for Amazon S3 Service) |

| SPECsfs2008_nfs.v3 | = | 180141 Ops/Sec (Overall Response Time = 0.86 msec) |

| Tested By | Avere Systems, Inc. |

|---|---|

| Product Name | FXT 3800 (3 Node Cluster, FlashCloud for Amazon S3 Service) |

| Hardware Available | July 2013 |

| Software Available | March 2014 |

| Date Tested | March 12 2014 |

| SFS License Number | 9020 |

| Licensee Locations | Pittsburgh, PA USA |

The Avere Systems FXT 3800 Edge filer running AvereOS V3.2 with FlashCloud™ for Amazon S3 provides Cloud NAS storage that enables performance scalability at the edge while leveraging object-based Cloud storage services at the core. Amazon's Simple Storage Service (S3) eliminates the need to own, provision, and manage storage by providing unlimited storage for which customers pay only for the capacity actually used. The AvereOS Hybrid NAS software dynamically organizes hot data into RAM, SSD, and SAS tiers, retaining active data on the FXT Edge filer and placing inactive data on the object-based Amazon S3 service.

The FXT Edge filer managed by AvereOS software provides a global namespace, clusters that scale out to as many as 50 nodes, support for millions of I/O operations per second, and over 100 GB/s of I/O bandwidth. The FXT 3800 is built on a 64-bit architecture that provides sub-millisecond responses to NFSv3 and CIFS client requests consisting of read, write, and directory/metadata update operations. The AvereOS FlashCloud functionality enables the Avere Edge filer to immediately acknowledge all filesystem requests for any data contained in the Edge filer's clustered Tiered File System, and to asynchronously flush inactive user and directory data to the Amazon S3 cloud storage service when the writeback timer expires.

The tested Edge filer configuration consisted of (3) FXT 3800 nodes backed by Amazon S3. The cloud storage service included a subscription to Amazon Web Services US-West-2 Simple Storage Service (S3) to store data, and a subscription to the Amazon Web Services Direct Connect network service, which provided private 1Gbps connectivity from the Avere Edge filer cluster directly to the AWS S3-US-West-2 region.

| Item No | Qty | Type | Vendor | Model/Name | Description |

|---|---|---|---|---|---|

| 1 | 3 | Storage Appliance | Avere Systems, Inc. | FXT 3800 | Avere Systems Cloud NAS Edge filer running AvereOS V3.2 software with FlashCloud for Amazon S3. Includes (13) 600 GB SAS Disks and (2) 400GB SSD Drives. |

| OS Name and Version | AvereOS V3.2 |

|---|---|

| Other Software | None |

| Filesystem Software | AvereOS V3.2 |

| Name | Value | Description |

|---|---|---|

| Writeback Time | 12 hours | Files may be modified up to 12 hours before being written back to Amazon Simple Storage Service (Amazon S3). |

| buf.autoTune | 0 | Statically size FXT memory caches. |

| buf.InitialBalance.smallFilePercent | 57 | Tune smallFile buffer to use 57 percent of memory pages. |

| buf.InitialBalance.largeFilePercent | 10 | Tune largeFile buffers to use 10 percent of memory pages. |

| cfs.randomWindowSize | 32 | Increase the size of random IOs from disk. |

| cluster.dirMgrConnMult | 12 | Multiplex directory manager network connections. |

| dirmgrSettings.unflushedFDLRThresholdToStartFlushing | 40000000 | Increase the directory log size. |

| dirmgrSettings.maxNumFdlrsPerNode | 120000000 | Increase the directory log size. |

| dirmgrSettings.maxNumFdlrsPerLog | 1500000 | Increase the directory log size. |

| dirmgrSettings.balanceAlgorithm | 1 | Balance directory manager objects across the cluster. |

| tokenmgrs.geoXYZ.fcrTokenSupported | no | Disable the use of full control read tokens. |

| tokenmgrs.geoXYZ.trackContentionOn | no | Disable token contention detection. |

| tokenmgrs.geoXYZ.lruTokenThreshold | 15400000 | Set a threshold for token recycling. |

| tokenmgrs.geoXYZ.maxTokenThreshold | 15500000 | Set maximum token count. |

| vcm.readdir_readahead_mask | 0x3000 | Optimize readdir performance. |

| vcm.disableAgressiveFhpRecycle | 1 | Disable optimistic filehandle recycling. |

| vcm.readdirInvokesReaddirplus | 0 | Disable optimistic trigger of client readdir calls to readdirplus fetches. |

| initcfg:cfs.num_inodes | 28000000 | Increase in-memory inode structures. |

| initcfg:vcm.fh_cache_entries | 16000000 | Increase in-memory filehandle cache structures. |

| initcfg:vcm.name_cache_entries | 18000000 | Increase in-memory name cache structures. |

None

| Description | Number of Disks | Usable Size |

|---|---|---|

| Each FXT 3800 node contains (13) 600 GB 10K RPM SAS disks. All FXT data resides on these disks. | 39 | 21.3 TB |

| Each FXT 3800 node contains (2) 400 GB eMLC SSD disks. All FXT data resides on these disks. | 6 | 2.2 TB |

| Each FXT 3800 node contains (1) 250 GB SATA disk. System disk. | 3 | 698.9 GB |

| Total | 48 | 24.1 TB |
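The disk counts in the table above follow directly from the per-node figures. The short Python sketch below recomputes them, and also shows one reading under which the SAS and SSD sizes line up: it assumes (the report does not say so) that per-disk sizes are decimal gigabytes and that the reported TB figures are binary terabytes (2^40 bytes). The system-disk figure is not reproduced exactly under that assumption, so treat the conversion as an observation, not as the vendor's method. All variable and function names are illustrative.

```python
NODES = 3
TIB = 2 ** 40  # assumed meaning of "TB" in the table (binary terabyte)

# (disks per node, size per disk in decimal GB), taken from the table rows
disk_groups = {
    "SAS": (13, 600),
    "SSD": (2, 400),
    "SATA system": (1, 250),
}

# Disk counts across the 3-node cluster
disk_counts = {name: NODES * per_node for name, (per_node, _) in disk_groups.items()}
total_disks = sum(disk_counts.values())
print(disk_counts, total_disks)


def raw_capacity_tib(name):
    """Raw capacity of one disk group in binary terabytes (assumed units)."""
    per_node, size_gb = disk_groups[name]
    return NODES * per_node * size_gb * 10 ** 9 / TIB


sas_tib = round(raw_capacity_tib("SAS"), 1)
ssd_tib = round(raw_capacity_tib("SSD"), 1)
print(sas_tib, ssd_tib)
```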

| Number of Filesystems | 1 |

|---|---|

| Total Exported Capacity | 22TB |

| Filesystem Type | FlashCloud for Amazon S3 Tiered File System |

| Filesystem Creation Options | AvereAPI command: corefiler.createCloudFiler('AmazonS3',{'cloudType': 's3', 'bucket': 'behn-cloudboi', 'serverName': 'behn-cloudboi.s3-us-west-2.amazonaws.com', 'cloudCredential': 'AWScred', 'cryptoMode': 'CBC-AES-256-HMAC-SHA-512', 'exportSize': '24189255811072'}) |

| Filesystem Config | Single file system exported via global name space. |

| Fileset Size | 20862.2 GB |
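The `exportSize` value in the creation command can be checked against the 22TB exported capacity and the fileset size listed above. The sketch below assumes that "TB" here means binary terabytes (2^40 bytes). The report does not state whether the fileset's "GB" is decimal or binary, so both readings are tried. Variable names are illustrative.

```python
EXPORT_SIZE_BYTES = 24189255811072  # 'exportSize' from the creation command
FILESET_SIZE_GB = 20862.2           # "Fileset Size" row

TIB = 2 ** 40
export_tib = EXPORT_SIZE_BYTES / TIB
print(export_tib)  # 22.0

# The unit of the fileset size is not stated; try both interpretations.
fileset_bytes_decimal = FILESET_SIZE_GB * 10 ** 9
fileset_bytes_binary = FILESET_SIZE_GB * 2 ** 30

fits_decimal = fileset_bytes_decimal < EXPORT_SIZE_BYTES
fits_binary = fileset_bytes_binary < EXPORT_SIZE_BYTES
print(fits_decimal, fits_binary)  # True True
```

Under either reading the benchmark fileset is smaller than the exported capacity.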

| Item No | Network Type | Number of Ports Used | Notes |

|---|---|---|---|

| 1 | 10 Gigabit Ethernet | 3 | One 10 Gigabit Ethernet port used for each FXT 3800 Edge filer appliance. |

| 2 | 1 Gigabit Ethernet | 1 | Single 1 Gigabit Ethernet port used to interconnect the AWS Direct Connect service with the Avere Edge filer cluster. |

Each FXT 3800 was attached via a single 10 GbE port to one Gnodal GS7200 72-port 10 GbE switch. The load generator client was attached to the same switch via a 10 GbE interface. The Amazon Simple Storage Service (S3) was connected via a private Amazon Web Services Direct Connect 1Gbps network link. A 1500-byte MTU was used on the network connecting the load generator to the system under test.

An MTU size of 1500 was set for all connections to the switch. The load generator was connected to the network via a single 10 GbE port. The SUT was configured with 3 separate IP addresses on one subnet. Each Avere Edge filer node was connected via a 10 GbE NIC and was sponsoring 1 IP address.

| Item No | Qty | Type | Description | Processing Function |

|---|---|---|---|---|

| 1 | 6 | CPU | Intel Xeon CPU E5645 2.40 GHz Hex-Core Processor | FXT 3800 AvereOS, Network, NFS/CIFS, Filesystem, Device Drivers |

Each Avere Edge filer node has two physical processors.

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |

|---|---|---|---|---|

| FXT 3800 System Memory | 144 | 3 | 432 | V |

| FXT 3800 NVRAM | 2 | 3 | 6 | NV |

| Grand Total Memory Gigabytes | 438 |
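The grand total in the table above follows from the per-node figures. A short Python check, using only values from the table (the variable names are illustrative):

```python
NODES = 3
SYSTEM_MEMORY_GB_PER_NODE = 144  # volatile (V)
NVRAM_GB_PER_NODE = 2            # nonvolatile (NV)

volatile_total_gb = NODES * SYSTEM_MEMORY_GB_PER_NODE
nonvolatile_total_gb = NODES * NVRAM_GB_PER_NODE
grand_total_gb = volatile_total_gb + nonvolatile_total_gb

print(volatile_total_gb, nonvolatile_total_gb, grand_total_gb)  # 432 6 438
```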

Each FXT node has main memory that is used for the operating system and for caching filesystem data. Each FXT contains two (2) super-capacitor-backed NVRAM modules used to provide stable storage for writes that have not yet been written to disk.

The Avere filesystem logs writes and metadata updates to the NVRAM module. Filesystem modifying NFS operations are not acknowledged until the data has been safely stored in NVRAM. The super-capacitor backing the NVRAM ensures that any uncommitted transactions are committed to persistent flash memory on the NVRAM card in the event of power loss.

The system under test consisted of (3) Avere FXT 3800 nodes. Each node was attached to the network via 10 Gigabit Ethernet. Each FXT 3800 node contains (13) 600 GB SAS disks and (2) 400GB eMLC SSD drives. The object-based Cloud Core filer storage system was attached to the network via Amazon Web Services Direct Connect 1Gbps private link. The 22TB exported capacity configuration ensures that there is no required communication with the Cloud Core filer during the period of the benchmark run. The storage and processing components of the Amazon S3 Service are exempt from this listing as stated by the Run Rules.

N/A

| Item No | Qty | Vendor | Model/Name | Description |

|---|---|---|---|---|

| 1 | 1 | Supermicro | SYS-1026T-6RFT+ | Supermicro Server with 48GB of RAM running CentOS 6.4 (Linux 2.6.32-358.0.1.el6.x86_64) |

| 2 | 1 | Gnodal | GS7200 | Gnodal 72 Port 10 GbE Switch. 72 SFP/SFP+ ports |

| LG Type Name | LG1 |

|---|---|

| BOM Item # | 1 |

| Processor Name | Intel Xeon E5645 2.40GHz Hex-Core Processor |

| Processor Speed | 2.40 GHz |

| Number of Processors (chips) | 2 |

| Number of Cores/Chip | 6 |

| Memory Size | 48 GB |

| Operating System | CentOS 6.4 (Linux 2.6.32-358.0.1.el6.x86_64) |

| Network Type | Intel Corporation 82599EB 10-Gigabit SFI/SFP+ |

| Network Attached Storage Type | NFS V3 |

|---|---|

| Number of Load Generators | 1 |

| Number of Processes per LG | 768 |

| Biod Max Read Setting | 2 |

| Biod Max Write Setting | 2 |

| Block Size | 0 |

| LG No | LG Type | Network | Target Filesystems | Notes |

|---|---|---|---|---|

| 1..1 | LG1 | 1 | /AmazonS3 | LG1 is connected to the same Gnodal GS7200 network switch as the FXT nodes. |

All clients were mounted against the single filesystem on all FXT nodes.

The load-generating client hosted 768 processes. The 768 processes were assigned to the 3 network interfaces of the FXT cluster so that they were evenly divided across all network paths to the FXT appliances. The filesystem data was evenly distributed across all disks and Avere Edge filer FXT appliances.
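An even split of 768 processes over 3 interfaces gives 256 processes per interface. The report does not describe the assignment mechanism, so the Python sketch below uses a simple round-robin purely as an illustration. The node labels are hypothetical placeholders, since the report does not list the actual IP addresses.

```python
from collections import Counter

NUM_PROCESSES = 768
# Hypothetical labels standing in for the three FXT node IP addresses.
NODE_ADDRESSES = ["fxt-node-1", "fxt-node-2", "fxt-node-3"]


def assign_round_robin(num_processes, targets):
    """Assign process i to target i mod len(targets) (assumed scheme)."""
    return [targets[i % len(targets)] for i in range(num_processes)]


assignment = assign_round_robin(NUM_PROCESSES, NODE_ADDRESSES)
per_target = Counter(assignment)
print(per_target)
```

Because 768 is divisible by 3, any scheme that cycles through the interfaces in order ends with exactly 256 processes on each.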

N/A

Generated on Mon Apr 07 08:54:57 2014 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 07-Apr-2014