SPEC SFS®2014_swbuild Result

Copyright © 2016-2019 Standard Performance Evaluation Corporation


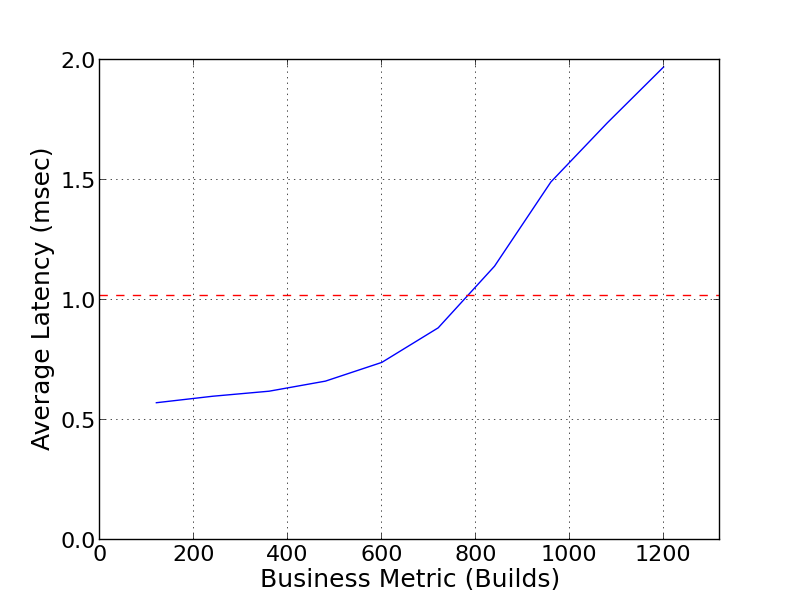

| WekaIO | SPEC SFS2014_swbuild = 1200 Builds |

|---|---|

| WekaIO Matrix 3.1 with Supermicro BigTwin Servers | Overall Response Time = 1.02 msec |


| WekaIO Matrix 3.1 with Supermicro BigTwin Servers | |

|---|---|

| Tested by | WekaIO |

| Hardware Available | July 2017 |

| Software Available | November 2017 |

| Date Tested | February 2018 |

| License Number | 4553 |

| Licensee Locations | San Jose, California |

WekaIO Matrix is a flash-native, parallel and distributed scale-out file system designed for demanding workloads, including AI and machine learning, genomic sequencing, real-time analytics, media rendering, EDA, software development and technical computing. Matrix software manages and dynamically scales data stores of up to hundreds of petabytes as a single-namespace, globally shared, POSIX-compliant file system, which WekaIO positions as delivering industry-leading performance and scale at a fraction of the price of traditional storage products. The software can be deployed as a dedicated storage appliance or in a hyperconverged mode with zero additional storage footprint, and can be used on-premises as well as in the public cloud. WekaIO Matrix is a software-only solution that runs on standard x86 hardware, which WekaIO presents as a large saving compared with proprietary all-flash appliances. This test platform is a dedicated storage implementation on Supermicro BigTwin servers.

| Item No | Qty | Type | Vendor | Model/Name | Description |

|---|---|---|---|---|---|

| 1 | 1 | Parallel File System | WekaIO | Matrix Software V3.1 | WekaIO Matrix is a parallel and distributed POSIX file system that scales across compute nodes and distributes data and metadata across the nodes for parallel access. |

| 2 | 4 | Storage Server Chassis | Supermicro | SYS-2029BT-HNR | Supermicro BigTwin chassis, each with 4 nodes per 2U chassis, populated with 4 NVMe drives per node. |

| 3 | 64 | 1.2TB U.2 NVMe SSD | Micron | MTFDHAL1T2MCF | Micron 9100 U.2 NVMe Enterprise Class Drives. |

| 4 | 32 | Processor | Intel | BX806735122 | Intel Xeon Gold 5122 4C 3.6GHz Processor |

| 5 | 16 | Network Interface Card | Mellanox | MCX456A-ECAT | 100Gbit ConnectX-4 Ethernet dual port PCI-E adapters, one per node. |

| 6 | 192 | DIMM | Supermicro | DIMM 1892mb 2667MHz SRx4 ECC | System Memory DDR4 2667MHz ECC |

| 7 | 16 | Boot Drive | Supermicro | MEM-IDSAVM8-128G | SATA DOM Boot Drive, 128G |

| 8 | 16 | Network Interface Card | Intel | AOC-MGP-I2M-O | 2 Port Intel i350 1GbE RJ45 SIOM |

| 9 | 16 | BIOS Module | Supermicro | SFT-OOB-LIC | Out of Band Firmware Management BIOS-Flash |

| 10 | 1 | Switch | Mellanox | MSN2700-CS2FC | 32-port 100GbE Switch |

| 11 | 11 | Clients | AIC | HP-201-AD | AIC chassis, each with 4 servers per 2U chassis. Each server had 2 Intel(R) Xeon(R) E5-2640 v4 CPUs and 128GB memory. A total of 11 of the 12 available servers were used in the testing. |

| Item No | Component | Type | Name and Version | Description |

|---|---|---|---|---|

| 1 | Storage Node | MatrixFS File System | 3.1 | WekaIO Matrix is a distributed and parallel POSIX file system that runs on any NVMe, SAS or SATA enabled commodity server or cloud compute instance and forms a single storage cluster. The file system presents a POSIX compliant, high performance, scalable global namespace to the applications. |

| 2 | Storage Node | Operating System | CENTOS 7.3 | The operating system on each storage node was 64-bit CENTOS Version 7.3. |

| 3 | Client | Operating System | CENTOS 7.3 | The operating system on the load generator client was 64-bit CENTOS Version 7.3. |

| 4 | Client | MatrixFS Client | 3.1 | MatrixFS Client software is mounted on the load generator clients and presents a POSIX compliant file system. |

Storage Node

| Parameter Name | Value | Description |

|---|---|---|

| SR-IOV | Enabled | Enables CPU virtualization technology |

SR-IOV was enabled in the node BIOS. Hyper threading was disabled. No additional hardware tuning was required.

Storage Node

| Parameter Name | Value | Description |

|---|---|---|

| Jumbo Frames | 4190 | Enables Ethernet frames of up to 4190 bytes |

Client

| Parameter Name | Value | Description |

|---|---|---|

| WriteAmplificationOptimizationLevel | 0 | Write amplification optimization level |

The MTU is required and valid for all environments and workloads.

Not applicable

| Item No | Description | Data Protection | Stable Storage | Qty |

|---|---|---|---|---|

| 1 | 1.2TB U.2 Micron 9100 Pro NVMe SSD in the Supermicro BigTwin node | 14+2 | Yes | 64 |

| 2 | 128G SATA DOM in the Supermicro BigTwin node to store OS | | Yes | 16 |

| Number of Filesystems | 1 |

|---|---|

| Total Capacity | 48.8 TiB |

| Filesystem Type | MatrixFS |

A single WekaIO Matrix file system was created and distributed evenly across all 64 NVMe drives in the cluster (16 storage nodes x 4 drives/node). Data was protected to a 14+2 failure level.

WekaIO MatrixFS was created and distributed evenly across all 16 storage nodes in the cluster. The deployment model is a dedicated server configuration protected with the Matrix Distributed Data Coding scheme of 14+2. All data and metadata are distributed evenly across the 16 storage nodes.
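As a rough cross-check of the capacity figures above, the following sketch walks from drive count to protected capacity. It assumes that each 1.2TB drive holds exactly 1.2 × 10^12 bytes and that a 14+2 scheme leaves 14/16 of the raw space for data; both are simplifying assumptions for illustration, not statements from the report. The report does not itemize the difference between that estimate and the stated 48.8 TiB, so the script only computes the gap.

```python
# Sketch: relate raw NVMe capacity to the reported file system capacity.
# Counts and the 48.8 TiB figure come from the report; the byte-exact drive
# size and the 14/16 efficiency model are assumptions for illustration.

NODES = 16
DRIVES_PER_NODE = 4
DRIVE_BYTES = 1.2e12      # assumed: "1.2TB" means 1.2 * 10**12 bytes
DATA_UNITS = 14           # from the 14+2 protection scheme
PARITY_UNITS = 2
REPORTED_TIB = 48.8       # "Total Capacity" in the report

TIB = 2**40               # bytes per tebibyte

drive_count = NODES * DRIVES_PER_NODE
raw_tib = drive_count * DRIVE_BYTES / TIB
protected_tib = raw_tib * DATA_UNITS / (DATA_UNITS + PARITY_UNITS)
gap_tib = protected_tib - REPORTED_TIB   # not broken down in the report

print(f"drives:                {drive_count}")
print(f"raw capacity:          {raw_tib:.2f} TiB")
print(f"after 14+2 (estimate): {protected_tib:.2f} TiB")
print(f"reported capacity:     {REPORTED_TIB:.1f} TiB")
print(f"unitemized difference: {gap_tib:.2f} TiB")
```

The estimate comes out above the reported capacity, which is consistent with some space being held back for purposes the report does not list.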

| Item No | Transport Type | Number of Ports Used | Notes |

|---|---|---|---|

| 1 | 100GbE NIC | 16 | The solution used a total of 16 100GbE ports from the storage nodes to the network switch. |

| 2 | 50GbE NIC | 11 | The solution used a total of 11 50GbE ports from the clients to the network switch. |

The solution under test utilized 16 100Gbit Ethernet ports from the storage nodes to the network switch. The clients utilized 11 50GbE connections to the network switch.
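The port counts above imply nominal aggregate line rates on each side of the switch. The sketch below is a back-of-the-envelope calculation only: it assumes every port runs at its nominal rate and ignores protocol overhead, and it says nothing about the throughput actually reached during the benchmark.

```python
# Sketch: nominal aggregate bandwidth on each side of the switch.
# Port counts and speeds are from the report; treating nominal line rate as
# usable bandwidth is a simplifying assumption.

STORAGE_PORTS = 16
STORAGE_PORT_GBIT = 100
CLIENT_PORTS = 11
CLIENT_PORT_GBIT = 50

storage_gbit = STORAGE_PORTS * STORAGE_PORT_GBIT
client_gbit = CLIENT_PORTS * CLIENT_PORT_GBIT
used_ports = STORAGE_PORTS + CLIENT_PORTS

# The smaller side bounds the nominal client-to-storage traffic.
nominal_limit_gbit = min(storage_gbit, client_gbit)

print(f"storage side: {storage_gbit} Gbit/s")
print(f"client side:  {client_gbit} Gbit/s")
print(f"switch ports in use: {used_ports}")
print(f"nominal limit: {nominal_limit_gbit} Gbit/s")
```

The sum of storage and client ports agrees with the 27 used ports listed for the Mellanox switch.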

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |

|---|---|---|---|---|---|

| 1 | Mellanox MSN 2700 | 100Gb Ethernet | 32 | 27 | Switch has Jumbo Frames enabled |

| Item No | Qty | Type | Location | Description | Processing Function |

|---|---|---|---|---|---|

| 1 | 32 | CPU | Supermicro BigTwin node | Intel(R) Xeon(R) Gold 5122, 3.6GHz, 4 core CPU | WekaIO MatrixFS, Data Protection, device driver |

| 2 | 22 | CPU | AIC HP201-AD | Intel(R) Xeon(R) E5-2640 v4, 2.4GHz, 10 core CPU | WekaIO MatrixFS client |

Each storage node has 2 processors, and each processor has 4 cores at 3.6GHz. Each client has 2 processors, and each processor has 10 cores. WekaIO Matrix utilized 8 of the 20 available cores on each client to run Matrix functions. The Intel Spectre and Meltdown patches were not applied to any element of the SUT, including the processors.
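The processor counts in the table follow from the per-node figures in the paragraph above. A minimal sketch of that arithmetic is shown here; the number of client cores left over after the Matrix allocation is a derived value, and whether all of those cores were available to the load generator is an assumption rather than something the report states.

```python
# Sketch: derive CPU and core totals from the per-node figures in the report.

STORAGE_NODES = 16
CLIENTS = 11
CPUS_PER_NODE = 2               # both storage nodes and clients
STORAGE_CORES_PER_CPU = 4
CLIENT_CORES_PER_CPU = 10
MATRIX_CORES_PER_CLIENT = 8     # cores running Matrix functions on a client

storage_cpus = STORAGE_NODES * CPUS_PER_NODE
storage_cores = storage_cpus * STORAGE_CORES_PER_CPU

client_cpus = CLIENTS * CPUS_PER_NODE
cores_per_client = CPUS_PER_NODE * CLIENT_CORES_PER_CPU
client_cores = CLIENTS * cores_per_client

# Derived: cores per client not allocated to Matrix functions.
remaining_per_client = cores_per_client - MATRIX_CORES_PER_CLIENT

print(f"storage CPUs / cores: {storage_cpus} / {storage_cores}")
print(f"client CPUs / cores:  {client_cpus} / {client_cores}")
print(f"client cores outside Matrix: {remaining_per_client} per client")
```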

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |

|---|---|---|---|---|

| Storage node memory | 96 | 16 | V | 1536 |

| Client node memory | 128 | 11 | V | 1408 |

| Grand Total Memory Gibibytes | | | | 2944 |

Each storage node has 96GBytes of memory for a total of 1,536GBytes. Each client has 128GBytes of memory. Matrix software utilized 20GBytes of memory per node.
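The memory totals can be reproduced from the per-node sizes and instance counts. The sketch below simply recomputes them; it treats the table's GiB and the prose's GBytes as the same unit, which is an assumption made to keep the check simple.

```python
# Sketch: recompute the memory table totals from size and instance count.
# Each row is (description, size per instance in GiB, number of instances),
# with values taken from the report.

memory_rows = [
    ("Storage node memory", 96, 16),
    ("Client node memory", 128, 11),
]

totals = {name: size * count for name, size, count in memory_rows}
grand_total = sum(totals.values())

for name, total in totals.items():
    print(f"{name}: {total} GiB")
print(f"Grand total: {grand_total} GiB")
```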

WekaIO does not use any internal memory to temporarily cache write data before it reaches the underlying storage system. All writes are committed directly to the storage media, so no battery-backed RAM protection is needed. Data is protected on the storage media using WekaIO Matrix Distributed Data Protection (14+2). In the event of a power failure, a write in transit would not be acknowledged.

The solution under test was a standard WekaIO Matrix enabled cluster in dedicated server mode. The solution will handle both large file I/O as well as small file random I/O and metadata intensive applications. No specialized tuning is required for different or mixed use workloads.

None

3 x AIC HP201-AD chassis (11 clients) were used to generate the benchmark workload. Each client had 1 x 50GbE network connection to a Mellanox MSN 2700 switch. 4 x Supermicro BigTwin 2029BT-HNR storage chassis (16 nodes) were benchmarked. Each storage node had 1 x 100GbE network connection to the same Mellanox MSN 2700 switch. The clients (AIC) had the MatrixFS native NVMe POSIX Client mounted and had direct and parallel access to all 16 storage nodes.

None

None

Generated on Wed Mar 13 16:41:47 2019 by SpecReport
