SPECsfs2008_nfs.v3 Result

Oracle Corporation : Oracle ZFS Storage ZS3-4

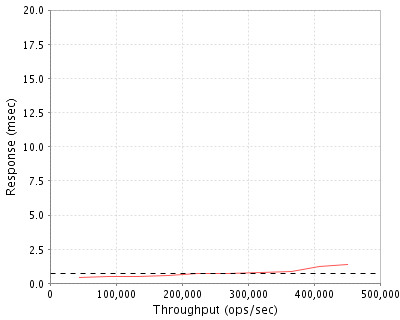

SPECsfs2008_nfs.v3 = 450702 Ops/Sec (Overall Response Time = 0.70 msec)

Performance

| Throughput (ops/sec) | Response (msec) |
|---|---|
| 44560 | 0.4 |
| 89370 | 0.5 |
| 134129 | 0.5 |
| 179075 | 0.6 |
| 224055 | 0.7 |
| 269180 | 0.7 |
| 319852 | 0.8 |
| 366649 | 0.9 |
| 407969 | 1.2 |
| 450702 | 1.4 |
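SPECsfs2008 defines the overall response time as the area under the throughput/response-time curve divided by the peak throughput. The Python sketch below shows that calculation on the ten load points in the table. It assumes the curve is anchored at the origin (0 ops/sec, 0 msec) and uses the trapezoidal rule. Because the published response times are rounded to 0.1 msec, the result only approximates the reported 0.70 msec.

```python
# Load points from the performance table: (throughput in ops/sec, response time in msec).
POINTS = [
    (44560, 0.4), (89370, 0.5), (134129, 0.5), (179075, 0.6), (224055, 0.7),
    (269180, 0.7), (319852, 0.8), (366649, 0.9), (407969, 1.2), (450702, 1.4),
]


def overall_response_time(points):
    """Area under the response-time curve divided by the peak throughput.

    Assumption: the curve starts at (0, 0) and is integrated with the
    trapezoidal rule. The inputs are rounded, so the result is approximate.
    """
    curve = [(0, 0.0)] + sorted(points)
    area = 0.0
    for (x0, y0), (x1, y1) in zip(curve, curve[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0  # one trapezoid per load step
    peak = curve[-1][0]                      # highest measured throughput
    return area / peak


if __name__ == "__main__":
    print(f"{overall_response_time(POINTS):.2f} msec")  # prints "0.70 msec"
```

With the rounded table values the area is about 313,721 msec·ops/sec, which gives roughly 0.696 msec and rounds to the published figure.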

Product and Test Information

| Field | Value |
|---|---|
| Tested By | Oracle Corporation |
| Product Name | Oracle ZFS Storage ZS3-4 |
| Hardware Available | September 2013 |
| Software Available | September 2013 |
| Date Tested | May 2013 |
| SFS License Number | 6 |
| Licensee Locations | Broomfield, CO USA |

The Oracle ZFS Storage ZS3-4 is a high-performance storage system that offers enterprise-class NAS and SAN capabilities with industry-leading Oracle Database integration, in a cost-effective high-availability configuration. The Oracle ZFS Storage ZS3-4 offers simplified setup and management, combined with industry-leading storage analytics and a performance-optimized platform that uses specialized read- and write-biased flash SSD caching devices. The Oracle ZFS Storage ZS3-4 can scale to 2 TB of memory, 80 CPU cores, and 3.4 PB of capacity, with up to 12.8 TB of flash cache in a high-availability configuration. Oracle ZFS Storage Appliances deliver additional economic value through bundled data services such as file- and block-level protocols (including connectivity over InfiniBand), compression, deduplication, thin provisioning, DTrace Analytics, virus scanning, snapshots, triple mirroring, triple-parity RAID, phone-home, NDMP, clustering, etc.

Configuration Bill of Materials

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 2 | Storage Controller | Oracle | Oracle ZFS Storage ZS3-4: controller part# 7105725 | Oracle ZFS Storage ZS3-4: controller |
| 2 | 64 | Memory | Oracle | 16 GB DDR3-1066 DIMMs part# 7105053 (Qty: 2) | 16 GB DDR3-1066 DIMMs (for factory installation). Ordered in quantity of 2 |
| 3 | 4 | 10 Gigabit Ethernet Adapter | Oracle | Sun Dual 10GbE SFP+ PCIe 2.0 Low Profile adapter part# 1109A-Z | Sun Dual 10GbE SFP+ PCIe 2.0 Low Profile adapter incorporating the Intel 82599 10 Gigabit Ethernet controller and supporting pluggable SFP+ transceivers. RoHS-5. ATO option |
| 4 | 8 | Short Wave Pluggable Transceiver | Oracle | 10Gbps Short Wave Pluggable Transceiver (SFP+) part# 2129A | Dual-rate transceiver: SFP+ SR. Supports 1 Gb/sec and 10 Gb/sec dual rate (for factory installation) |
| 5 | 20 | Storage Drive Enclosure | Oracle | Sun Disk Shelf SAS-2 part# DS2-0BASE | Sun disk shelf: base chassis with 2 SAS-2 I/O modules, 2 AC PSUs and 2 cooling fans (for factory installation) |
| 6 | 472 | Disk Drives | Oracle | Disk Drives 300GB 15K RPM SAS-2 HDD part# 7101274 | SAS-2 Disk Drives 300GB 15K RPM HDD |
| 7 | 8 | SSD Drives | Oracle | SAS-2 73GB 3.5-inch SSD Write Flash Accelerator part# 7101197 | SLC SAS-2 SSD 3.5-inch write flash accelerator with stingray bracket (for factory installation) |
| 8 | 4 | SAS-2 Host Bus Adapter | Oracle | SAS-2 16-Port 6Gbps HBA part# 7103790 | SAS PCIe 6Gb/s 16-port (for factory installation) |

Server Software

| Field | Value |
|---|---|
| OS Name and Version | Oracle ZFS Storage OS8 |
| Other Software | None |
| Filesystem Software | ZFS |

Server Tuning

| Name | Value | Description |
|---|---|---|
| arc_shrink_shift | 5 | Controls ARC shrinking. |

Server Tuning Notes

Download My Oracle Support document #1577276.1 and apply the included workflow, which sets arc_shrink_shift to 5.
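The report describes arc_shrink_shift only briefly. In the open-source OpenSolaris ZFS code, the variable is documented as log2 of the fraction of the ARC to reclaim, so each shrink step frees about arc_c >> arc_shrink_shift bytes, where arc_c is the ARC target size. This report does not confirm that the appliance OS keeps those semantics. If it does, a value of 5 frees about 1/32 of the target per step. The Python sketch below shows that arithmetic. It uses a hypothetical 1024 GiB ARC target, and the shift of 7 is included only for comparison.

```python
GIB = 1024 ** 3


def shrink_step_bytes(arc_target_bytes: int, arc_shrink_shift: int) -> int:
    """Bytes freed per ARC shrink step.

    Assumption: to_free = arc_c >> arc_shrink_shift, as in the OpenSolaris
    ZFS source; the appliance OS may differ.
    """
    return arc_target_bytes >> arc_shrink_shift


arc_c = 1024 * GIB  # hypothetical ARC target size, for illustration only
for shift in (5, 7):  # 5 is the tuned value; 7 is shown only for comparison
    print(shift, shrink_step_bytes(arc_c, shift) // GIB, "GiB")
# 5 32 GiB
# 7 8 GiB
```

A smaller shift releases memory in larger chunks, so under these assumed semantics each reclaim step gives back more of the ARC at once.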

Disks and Filesystems

| Description | Number of Disks | Usable Size |
|---|---|---|
| 300GB SAS 15K RPM Disk Drives | 472 | 62.3 TB |
| SSD 73GB SAS-2 Write Flash Accelerator | 8 | 584.0 GB |
| 500GB SATA 7.2K RPM Disk Drives. Each controller contains 2 of these disk drives; they are mirrored and are not used for cache data or data storage. | 4 | 899.0 GB |
| Total | 484 | 63.8 TB |

| Field | Value |
|---|---|
| Number of Filesystems | 216 |
| Total Exported Capacity | 60.80 TB |
| Filesystem Type | ZFS |
| Filesystem Creation Options | default |
| Filesystem Config | 216 ZFS file systems |
| Fileset Size | 52155.8 GB |

|

Both controllers are setup with 8 pools total (4 pools per controller). Each of the controller's pools are configured with

56 disk drives, 1 write flash accelerator and 3 spare disk drives. The pools

are also set up to mirror the data (RAID1) across all 56 drives.

The write flash accelerator in each pool is used for the ZFS Intent Log (ZIL).

All pools are configured with 27 ZFS file systems each. Since each controller

is configured with 4 pools and each pool contains 27 ZFS file system, in total

each controller has 108 ZFS file systems. Both of the controllers together in total have 216 ZFS file systems.

There are 2 internal mirrored system disk drives per

controller and are used only for the controllers core operating system.

These drives are not used for data cache or storing user data.
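The pool layout can be checked against the drive counts in the Bill of Materials and in the Disks and Filesystems table. The Python sketch below uses only numbers stated in this report. It assumes 1 TB = 1000 GB when adding the usable sizes, which the table's own total implies.

```python
CONTROLLERS = 2
POOLS_PER_CONTROLLER = 4
DATA_DISKS_PER_POOL = 56          # mirrored (RAID1) 300GB data drives
SPARES_PER_POOL = 3
LOG_SSDS_PER_POOL = 1             # write flash accelerator used for the ZIL
FILESYSTEMS_PER_POOL = 27
SYSTEM_DISKS_PER_CONTROLLER = 2   # internal mirrored 500GB system drives

pools = CONTROLLERS * POOLS_PER_CONTROLLER
hdds = pools * (DATA_DISKS_PER_POOL + SPARES_PER_POOL)
ssds = pools * LOG_SSDS_PER_POOL
system_disks = CONTROLLERS * SYSTEM_DISKS_PER_CONTROLLER
filesystems = pools * FILESYSTEMS_PER_POOL

print(pools, hdds, ssds, system_disks, hdds + ssds + system_disks, filesystems)
# 8 472 8 4 484 216

# Usable sizes from the table, summed with 1 TB = 1000 GB (assumption).
total_usable_tb = 62.3 + 584.0 / 1000 + 899.0 / 1000
print(round(total_usable_tb, 1))  # 63.8
```

The derived counts match the 472 HDDs, 8 SSDs, 484 total drives and 216 file systems reported above.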

Network Configuration

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 10 Gigabit Ethernet | 4 | Each controller has 2 dual-port 10 Gigabit Ethernet cards, using only a single port on each, with jumbo frames |

Network Configuration Notes

There are 8 ports in total, but only 4 are active at a time for high availability. The MTU size is set to 9000 on each of the 10 Gigabit ports.

Benchmark Network

Each controller was configured to use a single port on each of its two dual-port 10GbE network adapters for the benchmark network. All of the 10GbE network ports on all the load generator systems were connected to the Arista 7124SX switch that provided connectivity.
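The port counts in the network notes follow from the adapter layout. This small Python sketch reproduces them using the report's figures: 4 dual-port adapters in total, 2 per controller, with one port used per adapter.

```python
CONTROLLERS = 2
ADAPTERS_PER_CONTROLLER = 2  # Sun Dual 10GbE SFP+ adapters (BOM item 3: 4 in total)
PORTS_PER_ADAPTER = 2
PORTS_USED_PER_ADAPTER = 1   # only a single port per adapter carries benchmark traffic

physical_ports = CONTROLLERS * ADAPTERS_PER_CONTROLLER * PORTS_PER_ADAPTER
active_ports = CONTROLLERS * ADAPTERS_PER_CONTROLLER * PORTS_USED_PER_ADAPTER

print(physical_ports, active_ports)  # 8 4
```

The 8 physical ports also line up with the 8 SFP+ transceivers listed as BOM item 4.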

Processing Elements

| Item No | Qty | Type | Description | Processing Function |
|---|---|---|---|---|
| 1 | 8 | CPU | 2.4GHz 10-core Intel Xeon(tm) Processor E7-4870 | NFS, ZFS, TCP/IP, RAID/Storage Drivers |

Processing Element Notes

Each Oracle ZFS Storage ZS3-4 controller contains 4 physical processors, each with 10 processing cores.

Memory

| Description | Size in GB | Number of Instances | Total GB | Volatile (V) / Nonvolatile (NV) |
|---|---|---|---|---|
| Oracle ZFS Storage ZS3-4 controller memory | 1024 | 2 | 2048 | V |
| Grand Total Memory Gigabytes | | | 2048 | |

Memory Notes

The main memory of the Oracle ZFS Storage ZS3-4 controllers is used for the Adaptive Replacement Cache (ARC), which serves as the data cache, and for operating system memory.
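The memory totals can be cross-checked against BOM item 2. The Python sketch below assumes that the "(Qty: 2)" annotation means each of the 64 ordered units is a pair of 16 GB DIMMs. That reading matches the 2048 GB grand total, but the report does not state it outright.

```python
DIMM_SIZE_GB = 16     # 16 GB DDR3-1066 DIMMs (BOM item 2)
ORDERED_UNITS = 64    # quantity listed in the BOM
DIMMS_PER_UNIT = 2    # assumption: "(Qty: 2)" means two DIMMs per ordered unit
CONTROLLERS = 2

total_gb = ORDERED_UNITS * DIMMS_PER_UNIT * DIMM_SIZE_GB
per_controller_gb = total_gb // CONTROLLERS

print(total_gb, per_controller_gb)  # 2048 1024
```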

Stable Storage

The stable storage requirement is guaranteed by the ZFS Intent Log (ZIL), which logs writes and other file-system-changing transactions to either a write flash accelerator or a disk drive. Writes and other file-system-changing transactions are not acknowledged until the data is written to stable storage. Because this is an active-active high-availability cluster, if one controller fails or loses power, the other active controller can take over for it. Because the write flash accelerators and disk drives are located in the disk shelves and can be accessed by both controllers over the 8 backend SAS channels, the remaining active controller can complete any outstanding transactions using the ZIL. If both controllers lose power, the ZIL is used after power is restored to reinstate any writes and other file system changes.
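The acknowledgement rule described above has three parts: log to stable storage first, acknowledge the client second, and replay the log on takeover. The deliberately simplified Python model below illustrates that rule. The classes SharedLog and Controller and the take_over method are hypothetical stand-ins for a shelf-resident log device and a controller. This is not ZFS code and ignores pool ownership, transaction groups and real I/O.

```python
from dataclasses import dataclass, field


@dataclass
class SharedLog:
    """Hypothetical stand-in for a log device in a disk shelf, reachable by both controllers."""
    records: list = field(default_factory=list)


@dataclass
class Controller:
    name: str
    log: SharedLog
    # Changes held only in this controller's memory; lost if it fails.
    pending: dict = field(default_factory=dict)

    def write(self, key, value):
        self.log.records.append((key, value))  # 1. persist to the shared log
        self.pending[key] = value              # 2. keep the change in memory
        return "ACK"                           # 3. only now acknowledge the client

    def take_over(self, committed):
        """Rebuild state by replaying every logged change over the committed data."""
        state = dict(committed)
        for key, value in self.log.records:
            state[key] = value
        return state


log = SharedLog()
primary = Controller("controller-a", log)
partner = Controller("controller-b", log)

assert primary.write("file1", b"hello") == "ACK"
# controller-a fails before committing its pending change to the pool;
# controller-b reads the same log and recovers the acknowledged write.
recovered = partner.take_over(committed={})
print(recovered)  # {'file1': b'hello'}
```

The same replay step covers the power-loss case: after power returns, whichever controller imports the pool replays the log before serving clients.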

System Under Test Configuration Notes

The system under test is an Oracle ZFS Storage ZS3-4 cluster set up in an active-active configuration.

Other System Notes

Test Environment Bill of Materials

| Item No | Qty | Vendor | Model/Name | Description |
|---|---|---|---|---|
| 1 | 2 | Oracle | Sun Fire X4270 M2 | Sun Fire X4270 M2 with 144GB RAM and Oracle Solaris 11.1 |
| 2 | 1 | Arista | 7124SX | Arista 7124SX 24-port 10Gb Ethernet switch |

Load Generators

| LG Type Name | LG1 |
|---|---|
| BOM Item # | 1 |
| Processor Name | Intel Xeon(tm) X5680 |
| Processor Speed | 3.3 GHz |
| Number of Processors (chips) | 2 |
| Number of Cores/Chip | 6 |
| Memory Size | 144 GB |
| Operating System | Oracle Solaris 11.1 |
| Network Type | 10Gb Ethernet |

Load Generator (LG) Configuration

Benchmark Parameters

| Parameter | Value |
|---|---|
| Network Attached Storage Type | NFS V3 |
| Number of Load Generators | 2 |
| Number of Processes per LG | 432 |
| Biod Max Read Setting | 2 |
| Biod Max Write Setting | 2 |
| Block Size | 64 |

Testbed Configuration

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..2 | LG1 | 1 | /export/sfs-1../export/sfs-216 | None |

Load Generator Configuration Notes

File systems were mounted on all clients, and all clients were connected to the same physical and logical network.

Uniform Access Rule Compliance

Every client used 432 processes. All 216 file systems were mounted and accessed by each client and were evenly divided among all network paths to the Oracle ZFS Storage ZS3-4 controllers. The file system data was evenly distributed on the backend.
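The report states that the 216 file systems were divided evenly among the network paths, but it does not say how. The Python sketch below shows one way such an even split could work. The round-robin assignment of /export/sfs-N to the 4 active 10GbE paths is hypothetical. The sketch also derives 2 processes per file system per client from 432 processes over 216 file systems.

```python
from collections import Counter

FILESYSTEMS = [f"/export/sfs-{i}" for i in range(1, 217)]
ACTIVE_PATHS = 4          # active 10GbE ports on the controllers
PROCESSES_PER_LG = 432
LOAD_GENERATORS = 2


def assign_paths(filesystems, n_paths):
    """Hypothetical round-robin mapping of file systems to network path indices."""
    return {fs: i % n_paths for i, fs in enumerate(filesystems)}


mapping = assign_paths(FILESYSTEMS, ACTIVE_PATHS)
per_path = Counter(mapping.values())
procs_per_fs = PROCESSES_PER_LG // len(FILESYSTEMS)

print(sorted(per_path.values()), procs_per_fs, PROCESSES_PER_LG * LOAD_GENERATORS)
# [54, 54, 54, 54] 2 864
```

Under this assumed mapping, each path serves 54 file systems and the two clients run 864 processes in total.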

Other Notes

Config Diagrams

Generated on Mon Sep 09 18:45:30 2013 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 09-Sep-2013