SPEC SFS®2014_vda Result

Copyright © 2016-2021 Standard Performance Evaluation Corporation


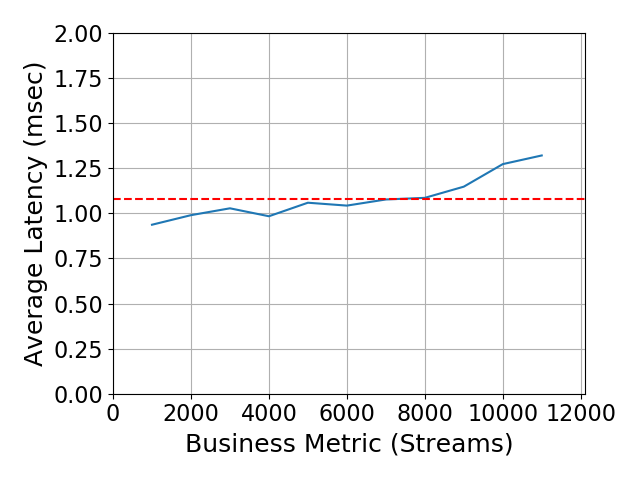

| ELEMENTS - syslink GmbH | SPEC SFS2014_vda = 11000 Streams |
|---|---|
| ELEMENTS BOLT w. BeeGFS 7.2.3 - VDA Benchmark Results | Overall Response Time = 1.08 msec |


| ELEMENTS BOLT w. BeeGFS 7.2.3 - VDA Benchmark Results | |
|---|---|
| Tested by | ELEMENTS - syslink GmbH |
| Hardware Available | May 2021 |
| Software Available | May 2021 |
| Date Tested | 8th August 2021 |
| License Number | 6311 |
| Licensee Locations | Duesseldorf, Germany |

ELEMENTS BOLT with the BeeGFS filesystem is an all-NVMe storage solution designed for media and entertainment workflows. It provides unmatched performance and is future-proof thanks to its open architecture and seamless integration into on-premises, cloud or hybrid media workflows. It scales in both capacity and performance, providing best-in-class throughput and latency with a very small operational footprint. ELEMENTS' unique set of media-centric workflow features (such as the integrated automation engine and web-based asset management) extends its capabilities far beyond those of common IT storage products.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 12 | Storage Node | ELEMENTS | ELEMENTS BOLT | 2U all-NVMe storage node running the BeeGFS filesystem, scalable in capacity and performance. Each BOLT is half-populated with 12 NVMe devices (Micron 9300) and has 192GB RAM, 2x single-port Mellanox ConnectX-5 100GbE HBAs (MCX515A-CCAT) and 1x dual-port 1Gbit Intel HBA. One 100Gbit link from a Mellanox HBA to the switch fabric carries storage RDMA traffic. 2x Micron 5300 960GB SSDs for OS / boot. |
| 2 | 20 | Client Node | ELEMENTS | ELEMENTS Gateway | 2U server with 32GB of RAM and a 3.2 GHz Intel Xeon Silver CPU. 2x single-port Mellanox 100GbE HBAs (MCX515A-CCAT) with a single 50Gbit connection to the switch fabric. 2x Micron 5300 480GB SSDs for OS / boot. |
| 3 | 1 | Prime Client Node | ELEMENTS | ELEMENTS Worker Node | 2U server with 32GB of RAM and a 3.9 GHz AMD Threadripper CPU. 1x dual-port Mellanox 100GbE HBA (MCX516A-CCAT) with a single 50Gbit connection to the switch fabric. 2x Micron 5300 480GB SSDs for OS / boot. The Worker Node is used as the "SPEC Prime". |
| 4 | 1 | Switch | Mellanox | MSN2700-CS2R | Mellanox MSN2700-CS2R switch with 32 100Gbit ports; client-facing 100Gbit ports are split into 2x 50Gbit. Used for RDMA storage communication between the clients and the storage nodes. Priority Flow-Control (PFC) configured for RoCEv2. |
| 5 | 12 | Cable | Mellanox | MCP1600-C005E26L-BL | Mellanox MCP1600-C005E26L-BL QSFP28 to QSFP28 cable connecting a 100Gbit switch port to the 100Gbit connector of an ELEMENTS BOLT (storage node). |
| 6 | 11 | Cable | Mellanox | MCP7H00-G004R26L | Mellanox MCP7H00-G004R26L QSFP28 to 2x QSFP28 breakout cable that fans out one 100Gbit switch port to 2x 50Gbit connectors, connecting the 20 ELEMENTS Gateways (load generators) and the Prime Client Node. |
| 7 | 1 | Switch | Arista | DCS-7050T-64-F | Arista DCS-7050T-64-F switch with 48 10Gbit ports, used as a lab switch for administrative access. No special configuration applied; not part of the SUT. |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Storage Node | Operating System | ELEMENTS Linux 7.5 | Operating system on the storage nodes. CentOS 7.5 based (Linux kernel 3.10.0-862.14.4) with Mellanox OFED 4.9. |
| 2 | Storage Node | Filesystem | BeeGFS 7.2.3 | Filesystem on the storage nodes: BeeGFS 7.2.3 storage, metadata and management daemons. |
| 3 | Client Node | Operating System | ELEMENTS Linux 7.7 | Operating system on the client nodes. CentOS 7.7 based (Linux kernel 3.10.0-1062) with Mellanox OFED 4.9. |
| 4 | Client Node | Filesystem Client | BeeGFS 7.2.3 | Filesystem client on the ELEMENTS Gateways (client nodes): BeeGFS 7.2.3 client daemon. |
| 5 | Worker Node | Operating System | ELEMENTS Linux 7.7 | Operating system on the worker node (SPEC Prime). CentOS 7.7 based with a Linux 5.3 kernel. |

ELEMENTS BOLT

| Parameter Name | Value | Description |
|---|---|---|
| C-State Power Management | C0 | Switch CPU C-State Management to use state C0 only |

ELEMENTS GATEWAY

| Parameter Name | Value | Description |
|---|---|---|
| C-State Power Management | C0 | Switch CPU C-State Management to use state C0 only |
| Jumbo Frames | 9000 | Use jumbo ethernet frames for optimised throughput and CPU utilization |
| Priority Flow-Control | enabled | Configure Priority Flow-Control / QOS VLAN tagging on all nodes to manage RoCEv2 RDMA traffic flow |


ELEMENTS BOLT

| Parameter Name | Value | Description |
|---|---|---|
| Jumbo Frames | 9000 | Use jumbo ethernet frames for optimised throughput and CPU utilization |
| Priority Flow-Control | enabled | Configure Priority Flow-Control / QOS VLAN tagging on all nodes to manage RoCEv2 RDMA traffic flow |
| BeeGFS storage worker threads | 24 | Raise the storage daemon worker threads from 12 to 24 |
| BeeGFS metadata worker threads | 24 | Raise the metadata daemon worker threads from 12 to 24 |

ELEMENTS GATEWAY

| Parameter Name | Value | Description |
|---|---|---|
| Jumbo Frames | 9000 | Use jumbo ethernet frames for optimised throughput and CPU utilization |
| Priority Flow-Control | enabled | Configure Priority Flow-Control / QOS VLAN tagging on all nodes to manage RoCEv2 RDMA traffic flow. See Mellanox configuration guides for details. |
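The jumbo-frame rows above claim better throughput and CPU utilization. A rough sketch of why, assuming plain TCP over IPv4 without options (40 bytes of L3/L4 headers inside the MTU) and 38 bytes of per-frame Ethernet wire overhead. The RoCEv2 traffic in this setup uses different headers, so treat the numbers as indicative only; the function names are illustrative.

```python
# Rough wire-efficiency comparison for standard vs. jumbo Ethernet frames.
# Assumptions (not from the report): 38 bytes of per-frame wire overhead
# (7B preamble + 1B SFD + 14B header + 4B FCS + 12B inter-frame gap) and
# 40 bytes of IPv4 + TCP headers without options inside the MTU.

ETH_WIRE_OVERHEAD = 7 + 1 + 14 + 4 + 12  # bytes per frame outside the MTU
L3_L4_HEADERS = 20 + 20                  # IPv4 + TCP, no options


def payload_per_frame(mtu: int) -> int:
    """Application bytes carried by one full-sized frame."""
    return mtu - L3_L4_HEADERS


def wire_efficiency(mtu: int) -> float:
    """Fraction of bytes on the wire that are application payload."""
    return payload_per_frame(mtu) / (mtu + ETH_WIRE_OVERHEAD)


def frames_per_gib(mtu: int) -> int:
    """Full-sized frames needed to move 1 GiB of payload (ceiling division)."""
    return -(-(1 << 30) // payload_per_frame(mtu))


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: efficiency {wire_efficiency(mtu):.4f}, "
              f"{frames_per_gib(mtu)} frames per GiB")
```

Under these assumptions a 9000-byte MTU needs roughly one sixth as many frames per GiB as the 1500-byte default, which is where most of the per-packet CPU savings come from.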

The BeeGFS clients use the default mount options. Each node has Priority Flow-Control enabled for lossless RDMA backend communication, following the Mellanox configuration guides. Aside from this, the SOMAXCONN kernel parameter was raised according to the SPEC tuning guidelines to sustain the high number of TCP socket connections from the SPEC prime client node. This setting is independent of the SUT.
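On Linux, `net.core.somaxconn` caps the accept-queue backlog a listening socket may request; `listen()` silently truncates larger values to it. The sketch below reads the current limit from procfs and shows the effective backlog. The requested backlog of 4096 is a hypothetical value; the report does not state what SOMAXCONN was raised to.

```python
# Sketch: on Linux the kernel silently caps the backlog passed to listen()
# at net.core.somaxconn. The procfs path is the standard location; the
# requested backlog below is a hypothetical example value.
import socket
from pathlib import Path
from typing import Optional

SOMAXCONN_PATH = Path("/proc/sys/net/core/somaxconn")


def read_somaxconn(path: Path = SOMAXCONN_PATH) -> Optional[int]:
    """Return the current somaxconn value, or None if it cannot be read."""
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


def effective_backlog(requested: int, somaxconn: int) -> int:
    """Backlog the kernel actually applies: the smaller of the two values."""
    return min(requested, somaxconn)


if __name__ == "__main__":
    limit = read_somaxconn()
    requested = 4096  # hypothetical backlog a busy listener might request
    if limit is None:
        print("somaxconn not readable (not Linux?)")
    else:
        print(f"somaxconn={limit}, listen({requested}) -> "
              f"effective backlog {effective_backlog(requested, limit)}")
    # Python's compiled-in default constant, for comparison.
    print("socket.SOMAXCONN =", socket.SOMAXCONN)
```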


| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | Micron 9300 3.84TB NVMe SSD, 12 per storage node. | RAID5 (11+1) | Yes | 144 |

| Number of Filesystems | Total Capacity | Filesystem Type |
|---|---|---|
| 1 | 472068GiB | BeeGFS 7.2.3 |
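The reported total capacity can be reproduced from the storage table: 12 nodes, each with RAID5 (11+1), so 11 data drives per node. This sketch assumes each "3.84TB" Micron 9300 holds exactly 3.84 × 10^12 bytes and ignores RAID and filesystem metadata overhead.

```python
# Worked check of the reported total capacity.
# Assumptions: each "3.84TB" drive holds exactly 3.84 * 10**12 bytes;
# RAID and filesystem metadata overhead are ignored.
NODES = 12
DRIVES_PER_NODE = 12
PARITY_DRIVES_PER_NODE = 1          # RAID5 (11+1)
DRIVE_BYTES = 3_840_000_000_000     # 3.84 TB, decimal units
GIB = 1 << 30


def usable_gib(nodes: int, drives: int, parity: int, drive_bytes: int) -> float:
    """Usable capacity in GiB: only data drives count, summed over all nodes."""
    data_drives = nodes * (drives - parity)
    return data_drives * drive_bytes / GIB


if __name__ == "__main__":
    total = usable_gib(NODES, DRIVES_PER_NODE, PARITY_DRIVES_PER_NODE, DRIVE_BYTES)
    print(f"{total:.1f} GiB")  # 472068.8 GiB, vs. 472068GiB reported
```

The 132 data drives account for the reported figure almost exactly, which suggests it is raw RAID5 data capacity rather than a post-format measurement.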

One filesystem spans all 12 nodes, with a striping chunk size of 8MB and 4 storage targets per file.
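These striping parameters can be illustrated with generic round-robin (RAID0-style) chunk placement: a file is cut into fixed-size chunks, and consecutive chunks go to consecutive targets among the file's 4 targets. This is a sketch of the arithmetic, assuming "8MB" means 8 MiB; it is not BeeGFS source code, and `locate` and `ChunkLocation` are hypothetical names.

```python
# Round-robin striping arithmetic with the parameters from the note above.
# Assumption: "8MB" chunk size is taken as 8 MiB. Illustration only.
from typing import NamedTuple

CHUNK_SIZE = 8 * 1024 * 1024  # assumed 8 MiB
NUM_TARGETS = 4


class ChunkLocation(NamedTuple):
    target: int         # index into the file's list of targets (0..3)
    target_offset: int  # byte offset inside that target's chunk file


def locate(file_offset: int, chunk_size: int = CHUNK_SIZE,
           num_targets: int = NUM_TARGETS) -> ChunkLocation:
    """Map a byte offset in the file to (target, offset on that target)."""
    chunk_index = file_offset // chunk_size    # which chunk of the file
    target = chunk_index % num_targets         # round-robin target choice
    stripe_row = chunk_index // num_targets    # completed rounds before it
    within_chunk = file_offset % chunk_size
    return ChunkLocation(target, stripe_row * chunk_size + within_chunk)


if __name__ == "__main__":
    mib = 1024 * 1024
    for off in (0, 8 * mib, 33 * mib):
        print(off // mib, "MiB ->", locate(off))
```

For example, byte offset 33 MiB falls in chunk 4, which wraps around to the first target at 9 MiB into that target's chunk file. With 4 targets per file (and, presumably, one target per storage node), each file touches only 4 of the 12 nodes.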

Each node had one RAID5 array (11+1, 16KB RAID stripe size) across its 12 NVMe devices and exported one LUN. All ELEMENTS BOLT nodes were only half populated.
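RAID5 protects each stripe with one parity strip, the byte-wise XOR of the data strips, so an 11+1 array survives the loss of any single device. A minimal sketch of that parity math, assuming 16 KiB strips (one reading of the "16kb" stripe size) and ignoring the parity rotation across devices that real RAID5 performs; strip contents are random test data.

```python
# RAID5 parity for one stripe of an 11+1 array: the parity strip is the
# XOR of the 11 data strips, and any single missing strip equals the XOR
# of the remaining 11. Assumptions: 16 KiB strips, no parity rotation.
import os
from functools import reduce
from typing import List

DATA_STRIPS = 11
STRIP_SIZE = 16 * 1024


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length strips."""
    if len(a) != len(b):
        raise ValueError("strips must have equal length")
    x = int.from_bytes(a, "big") ^ int.from_bytes(b, "big")
    return x.to_bytes(len(a), "big")


def parity(data_strips: List[bytes]) -> bytes:
    """Parity strip written to the 12th device."""
    return reduce(xor_bytes, data_strips)


def rebuild(survivors: List[bytes]) -> bytes:
    """Reconstruct the single lost strip (data or parity) from the other 11."""
    return reduce(xor_bytes, survivors)


if __name__ == "__main__":
    data = [os.urandom(STRIP_SIZE) for _ in range(DATA_STRIPS)]
    p = parity(data)
    lost = 5  # simulate failure of the device holding data strip 5
    survivors = data[:lost] + data[lost + 1:] + [p]
    assert rebuild(survivors) == data[lost]
    print(f"rebuilt strip {lost} from {len(survivors)} survivors")
```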

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 100GbE | 12 | Storage nodes were using 100Gbit link speed |
| 2 | 50GbE | 20 | Client nodes were only using 50Gbit link speed due to port split on switch |
| 3 | 50GbE | 1 | Prime Client node was connected to the storage network using a single 50Gbit link |
| 4 | 1GbE | 32 | All storage and client nodes were connected to a 1Gbit house network for administrative and management access. |

The client nodes' actual link speed was only 50Gbit because of the port-split configuration on the Mellanox switch.
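The port counts above give a quick ceiling on aggregate network line rate. A back-of-the-envelope sketch counting raw link speed only (no protocol overhead, PFC pauses or switch limits): 20 client links at 50Gbit versus 12 storage links at 100Gbit.

```python
# Back-of-the-envelope aggregate line rates from the transport table.
# Raw link speeds only; protocol overhead, PFC pauses and switch limits
# are ignored.
CLIENTS, CLIENT_GBIT = 20, 50
STORAGE_NODES, STORAGE_GBIT = 12, 100


def aggregate_gbit(ports: int, gbit_per_port: int) -> int:
    """Sum of link speeds in Gbit/s."""
    return ports * gbit_per_port


def gbit_to_gbyte(gbit: float) -> float:
    """Decimal gigabits per second to decimal gigabytes per second."""
    return gbit / 8


if __name__ == "__main__":
    client = aggregate_gbit(CLIENTS, CLIENT_GBIT)            # 1000 Gbit/s
    storage = aggregate_gbit(STORAGE_NODES, STORAGE_GBIT)    # 1200 Gbit/s
    print(f"clients: {client} Gbit/s = {gbit_to_gbyte(client):.0f} GB/s")
    print(f"storage: {storage} Gbit/s = {gbit_to_gbyte(storage):.0f} GB/s")
    print(f"network ceiling: {min(client, storage)} Gbit/s")
```

Under these assumptions the clients' 1000 Gbit/s (125 GB/s) is below the storage nodes' 1200 Gbit/s (150 GB/s), so the 50Gbit port split makes the client side the tighter network limit.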

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | Mellanox | MSN2700-CS2R | 32 | 32 | 100Gbit ports attached to clients operate in 50Gbit split-port mode (2x 50Gbit per physical 100Gbit port). Priority Flow-Control enabled. |
| 2 | Arista | DCS-7050T-64-F | 48 | 48 | Arista DCS-7050T-64-F switch with 48 10Gbit ports, used as a lab switch for administrative access. No special configuration applied; not part of the SUT. |

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 24 | CPU | Processing Element Location | Intel Xeon Gold 5222 4-core CPU 3.8GHz | Storage Nodes |
| 2 | 20 | CPU | Processing Element Location | Intel Xeon Silver 4215R 8-core CPU 3.2GHz | Client Nodes |
| 3 | 1 | CPU | Processing Element Location | AMD Threadripper PRO 3955WX 16-core 3.9GHz | Prime Client Node |


| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| ELEMENTS BOLT (storage) memory | 192 | 12 | V | 2304 |
| ELEMENTS Gateway (client) memory | 32 | 20 | V | 640 |
| Prime memory | 32 | 1 | V | 32 |
| Grand Total Memory Gibibytes | | | | 2976 |

The storage nodes have a total of 2304GiB of memory, the client nodes a total of 640GiB, and the prime client node 32GiB. The SUT (storage and client nodes, excluding the prime client node) has a total memory of 2944GiB.

The ELEMENTS BOLT does not use a write cache for data in flight; writes are committed to the NVMe storage media immediately. FSYNC is enforced across the whole storage stack (the default BeeGFS filesystem setting). All involved components use redundant power supplies, and the operating system disks use RAID1 in write-through mode.
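What "committed" means for a single write can be shown at the application level: data is only guaranteed to be on stable media once `fsync` returns. The sketch below is a generic Linux/POSIX durable-write pattern (fsync the file, then its parent directory); it illustrates the concept behind enforced FSYNC, not how BeeGFS implements it. The file name is hypothetical.

```python
# Application-level durable write: data counts as committed only after
# os.fsync() returns for the file and, on Linux/POSIX, for its parent
# directory (so the new directory entry also survives a crash).
# Illustration of the concept only; this is not BeeGFS code.
import os
import tempfile


def durable_write(path: str, data: bytes) -> None:
    """Write data to path and flush it (and its directory entry) to stable storage."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        view = memoryview(data)
        while view:                  # os.write may write fewer bytes than asked
            written = os.write(fd, view)
            view = view[written:]
        os.fsync(fd)                 # flush file data and metadata
    finally:
        os.close(fd)
    dir_fd = os.open(os.path.dirname(os.path.abspath(path)), os.O_RDONLY)
    try:
        os.fsync(dir_fd)             # persist the directory entry
    finally:
        os.close(dir_fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        target = os.path.join(d, "frame_0001.dat")  # hypothetical file name
        durable_write(target, b"\x00" * 4096)
        print(os.path.getsize(target))  # 4096
```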

The tested storage configuration is a common solution based on standard ELEMENTS hardware, designed for high-performance media production workflows. These include ingesting media and streaming it in various formats to video production workstations, as well as high-throughput frame-based and VFX workflows. All components used in the test were patched against Spectre and Meltdown (CVE-2017-5754, CVE-2017-5753, CVE-2017-5715).


All storage and client nodes are connected to the listed Mellanox switch. The client nodes access the storage via RDMA (RoCEv2) using the native BeeGFS client. The network layer is configured to use Priority Flow-Control to manage the data flow, as per the Mellanox configuration guidelines for RoCEv2.


Generated on Tue Aug 24 07:10:13 2021 by SpecReport
