SPECstorage™ Solution 2020_ai_image Result

Copyright © 2016-2022 Standard Performance Evaluation Corporation


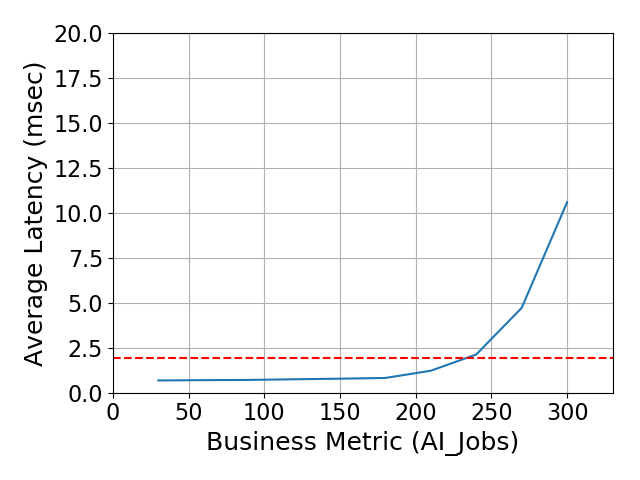

| Nutanix | SPECstorage Solution 2020_ai_image = 300 AI_Jobs |
|---|---|
| Nutanix Files | Overall Response Time = 1.94 msec |


| Nutanix Files | |
|---|---|
| Tested by | Nutanix |
| Hardware Available | 08 2022 |
| Software Available | 08 2022 |
| Date Tested | 07 2022 |
| License Number | 4539 |
| Licensee Locations | Durham, NC |

Nutanix Files is a highly available, scale-out file server solution. Deployed on the Nutanix Cloud Platform, it can be co-deployed with client and application servers or deployed as a dedicated NAS solution. Nutanix Files provides enterprise array performance while being software defined. It can scale from 4 to 24 vCPUs and from 12 GB to 512 GB of memory per FSVM (file server VM). A cluster can start with 3 FSVMs and scale to 16 FSVMs. In addition to high performance and low latency, it provides NFS, SMB, and multiprotocol access. Backup and disaster recovery are easy to achieve with native replication or with partner backup solutions. Nutanix Files is enhanced by Nutanix Data Lens, which is designed specifically to provide unique insights into unstructured data environments, as well as insight into data movement, access, and audit logging. Data Lens also provides the ability to set up industry-leading ransomware protection for data.
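The per-FSVM and per-cluster limits above multiply out to an aggregate resource range for a Files cluster. The sketch below does only that arithmetic; the helper and constant names are hypothetical, and it deliberately ignores host capacity and VM placement, which also constrain real deployments.

```python
from dataclasses import dataclass

# Limits as stated in the description above.
FSVM_VCPUS = (4, 24)        # min, max vCPUs per FSVM
FSVM_MEMORY_GB = (12, 512)  # min, max memory per FSVM, in GB
CLUSTER_FSVMS = (3, 16)     # min, max FSVMs per Files cluster


@dataclass(frozen=True)
class ClusterRange:
    min_vcpus: int
    max_vcpus: int
    min_memory_gb: int
    max_memory_gb: int


def files_cluster_range() -> ClusterRange:
    """Aggregate FSVM resources for the smallest and largest Files cluster.

    Hypothetical helper: multiplies per-FSVM bounds by cluster-size bounds and
    does not model host capacity or placement constraints.
    """
    lo_n, hi_n = CLUSTER_FSVMS
    return ClusterRange(
        min_vcpus=lo_n * FSVM_VCPUS[0],
        max_vcpus=hi_n * FSVM_VCPUS[1],
        min_memory_gb=lo_n * FSVM_MEMORY_GB[0],
        max_memory_gb=hi_n * FSVM_MEMORY_GB[1],
    )


if __name__ == "__main__":
    print(files_cluster_range())
```

For reference, the tested configuration (4 FSVMs with 16 vCPUs and 384 GB each) totals 64 vCPUs and 1536 GB, well inside that range.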

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 4 | Node | Super Micro Computer | NX-8170-G8 | The high-performance Nutanix NX-8170-G8 node with "Ice Lake" Intel(R) Xeon(R) Gold 6336Y CPU @ 2.40GHz is a powerful cloud platform. Each node has 1024 GiB of memory, 1 Mellanox CX-6 100 GbE dual-port network card, and an embedded 1 GbE Ethernet card for IPMI. Each node has dual 512 GB Marvell boot devices. For data storage, each node has [2] 1.5 TB ultra-low-latency Intel Optane devices and [8] 2 TB NVMe storage devices. |
| 2 | 2 | Node | Super Micro Computer | NX-8170-G8 | The high-performance Nutanix NX-8170-G8 node with "Ice Lake" Intel(R) Xeon(R) Gold 5315Y CPU @ 3.20GHz is a powerful cloud platform. Each node has 512 GiB of memory, 1 Mellanox CX-6 100 GbE dual-port network card, and an embedded 1 GbE Ethernet card for IPMI. Each node has dual 512 GB Marvell boot devices. For data storage, each node has [2] 0.75 TB ultra-low-latency Intel Optane devices and [8] 2 TB NVMe storage devices. |
| 3 | 1 | Switch | Arista | Arista DCS-7160-32CQ-R | The Arista DCS-7160 offers 32 ports of 100 GbE in a compact 1U form factor. It can be used as a leaf or spine switch and provides migration capabilities from 10 GbE to 25 GbE. It can provide a maximum system throughput of 6.4 Tbps and supports latency as low as 2 usec. |
| 4 | 1 | Switch | Dell | Dell PowerConnect 5548 | The Dell PowerConnect 5548 offers 48 ports of 10/100/1000 and 2 x 10 GbE uplink ports. |
| 5 | 6 | Ethernet Card | NVIDIA (Mellanox) | MCX623106AS-CDAT | The Mellanox ConnectX-6 Dx is a 2-port 100 GbE card with QSFP56 connectors and a PCIe 4.0 x16 host interface (16.0 GT/s link rate). |
| 6 | 4 | Disk | Intel | P5800X SSDPF21Q800GB | The 800 GB Intel Optane SSD offers increased performance over NAND SSDs. This 2.5 in PCIe x4, 3D XPoint device provides a low-latency read tier with response times below 3 us. |
| 7 | 8 | Disk | Intel | P5800X SSDPF21Q016TB | The 1.6 TB Intel Optane SSD offers increased performance over NAND SSDs. This 2.5 in PCIe x4, 3D XPoint device provides a low-latency read tier with response times below 3 us. |
| 8 | 44 | Disk | Samsung | PM9A3 MZQL21T9HCJR-00A07 | The 1.92 TB Samsung NVMe drive uses PCIe Gen 4 x4 lanes to offer up to 6800 MB/s sequential read performance. |
| 9 | 4 | Disk | Intel | P4510 SSDPE2KX020T8 | The 2.0 TB Intel TLC 3D NAND device offers data center resilience under heavy load. It uses PCIe 3.1 x4 lanes to offer sequential read bandwidth up to 3200 MB/s and sequential write bandwidth up to 2000 MB/s. |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Acropolis Hypervisor (AHV) | Hypervisor | 5.10.117-2.el7.nutanix.20220304.227.x86_64 | The 6 Super Micro servers were installed with Acropolis Hypervisor (AHV). AHV is the native Nutanix hypervisor and is based on the CentOS KVM foundation. It extends its base functionality to include features such as HA, live migration, and IP address management. |
| 2 | Acropolis Operating System (AOS) | Storage | 6.5 | Six Controller Virtual Machines (CVMs) running the Acropolis Operating System (AOS) were deployed as a cluster. AOS is the core software stack that provides the abstraction layer between the hypervisor (running on-premises or in the cloud) and the running workloads. It provides functionality such as storage services, security, backup and disaster recovery, and more. A key design component of AOS is the Distributed Storage Fabric (DSF). The DSF appears to the hypervisor like any centralized storage array; however, all I/O is handled locally to provide the highest performance. |
| 3 | Nutanix Files | File Server | 4.1.0.3 | Four Nutanix Files VMs (FSVMs) were deployed as a Files cluster. Nutanix Files is a software-defined, scale-out file storage solution that provides a repository for unstructured data. Files is a fully integrated, core component of the Nutanix cloud platform that supports clients and servers connecting over SMB and NFS protocols. Nutanix Files offers native high availability and uses the Nutanix Distributed Storage Fabric for intracluster data resiliency and intercluster asynchronous disaster recovery. |
| 4 | OpenZFS | Filesystem | 0.7.1 | The minervafs filesystem, customized by Nutanix, sits on top of the OpenZFS filesystem. |
| 5 | Load Generators | Linux | CentOS 8.4 64-bit | 13 Linux guest clients in total (1 orchestration client and 12 load-generating clients), all deployed as virtual machines. |

Node

| Parameter Name | Value | Description |
|---|---|---|
| Port Speed | 100 GbE | Each node has 2 x 100 GbE ports connected to the switch |
| MTU | 1500 | Default value of the OS |

Please refer to the diagram entitled "Nutanix Private Cloud" for a hardware view. The System Under Test used 2 x 100 GbE ports per node with the MTU set to 1500. No custom tuning was done; tests were run on the default configuration as shipped from the manufacturer.

CentOS 8.4 Linux Clients

| Parameter Name | Value | Description |
|---|---|---|
| vers | 3 | NFS protocol version set to 3 |
| rsize,wsize | 1048576 | NFS read and write transfer sizes set to 1 MiB |
| nconnect | 4 | Increases the number of NFS client connections from the default of 1 to 4 |

Nutanix Files FSVMs

| Parameter Name | Value | Description |
|---|---|---|
| Filesystem buffer cache | 90 | Allows the filesystem buffer cache to grow to a maximum of 90 percent of system memory |

AHV

| Parameter Name | Value | Description |
|---|---|---|
| Acropolis Dynamic Scheduling (ADS) | Disable | Disables automatic load balancing of VMs by the hypervisor |

The CentOS 8.4 clients mounted the shares over NFSv3 with rsize and wsize of 1048576 and nconnect set to 4. On the FSVMs, the filesystem buffer cache was allowed to grow to 90 percent of system memory.
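To show how the client tuning above translates into a mount, the sketch below assembles the NFS option string from the reported values and builds a Linux `mount` argument list. The server name, export path, and mount point are hypothetical placeholders, since the report does not list them, and the helper name is illustrative only.

```python
import shlex

# Client-side NFS mount options reported for this test.
NFS_OPTIONS = {
    "vers": "3",         # NFSv3
    "rsize": "1048576",  # 1 MiB read transfer size
    "wsize": "1048576",  # 1 MiB write transfer size
    "nconnect": "4",     # 4 TCP connections per mount instead of the default 1
}


def mount_command(server: str, export: str, mountpoint: str) -> list[str]:
    """Build a `mount` argv for one share.

    `server`, `export`, and `mountpoint` are hypothetical placeholders.
    """
    opts = ",".join(f"{key}={value}" for key, value in NFS_OPTIONS.items())
    return ["mount", "-t", "nfs", "-o", opts, f"{server}:{export}", mountpoint]


if __name__ == "__main__":
    argv = mount_command("files.example.local", "/share01", "/mnt/share01")
    print(shlex.join(argv))
```

Returning an argument list rather than a single string keeps it safe to pass to `subprocess.run` without shell quoting issues.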

None

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | Nutanix NX-8170-G8 node with [2] 1.5 TB ultra low latency Intel Optane devices and [8] 2 TB NVMe storage devices. All devices from all nodes are combined into a shared Distributed Storage Fabric (DSF) | Replication Factor 2 (RF2) | Yes | 4 |
| 2 | Nutanix NX-8170-G8 node with [2] 0.75 TB ultra low latency Intel Optane devices and [8] 2 TB NVMe storage devices. All devices from all nodes are combined into a shared Distributed Storage Fabric (DSF) | Replication Factor 2 (RF2) | Yes | 2 |

| Number of Filesystems | 12 |
|---|---|
| Total Capacity | 43.67 TiB |
| Filesystem Type | blockstore->iSCSI->minervafs->NFSv3 |

Nutanix Files deploys like an application on top of the Nutanix Cloud Platform. Upon share creation, the data storage layers shown in the diagram "Data Storage Layers" are deployed. Each minervafs filesystem resides on a volume group. The volume groups are composed of iSCSI LUNs that are vdisks provided by the Nutanix AOS Distributed Storage Fabric. All FSVMs attach to vdisks that are load balanced across the resources of the cluster, so that there are no single points of failure or single-resource bottlenecks. No complex iSCSI SAN configuration steps are required. The Distributed Storage Fabric takes all of the physical disks and creates a single physical storage pool. The storage pool keeps two copies of the data, so that if there is any disruption to the first copy, the data remains available from the second copy. Nutanix calls this RF2, or replication factor 2. In RAID terminology, this is equivalent to the protection of RAID10. However, the copies are more sophisticated than in classic RAID10, where an entire physical disk is mirrored on another physical disk. Instead, with RF2 the data is mirrored at the extent level (1 to 4 MB) across different nodes and physical disks while ensuring that two total copies of the data exist. In this environment, the total physical capacity is 87.33 TiB; because RF2 stores every extent twice, the usable capacity is half of that (87.33 / 2 ≈ 43.67 TiB), which matches the total capacity reported for the filesystems.
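A deliberately simplified model of RF2 may help make extent-level mirroring concrete: each extent gets two replicas on two different nodes, and usable capacity is physical capacity divided by the replication factor. The round-robin placement policy, node names, and function names below are assumptions for illustration; the actual DSF placement logic is not described in this report, and the capacity helper ignores metadata and other overheads.

```python
def place_extents(num_extents: int, nodes: list[str], rf: int = 2) -> list[tuple[str, ...]]:
    """Assign `rf` replicas of each extent to `rf` distinct nodes.

    Simplified round-robin policy (an assumption, not the real DSF algorithm):
    extent i starts at node i mod N, and its other replicas go to the next nodes.
    """
    if rf > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    n = len(nodes)
    return [tuple(nodes[(i + k) % n] for k in range(rf)) for i in range(num_extents)]


def usable_capacity_tib(physical_tib: float, rf: int = 2) -> float:
    """Usable capacity when every byte is stored rf times (ignores overheads)."""
    return physical_tib / rf


if __name__ == "__main__":
    nodes = [f"node{i}" for i in range(1, 7)]  # the six NX-8170-G8 nodes
    for extent, replicas in enumerate(place_extents(4, nodes)):
        print(extent, replicas)
    print(f"{usable_capacity_tib(87.33):.3f} TiB usable")
```

Because no two replicas of an extent share a node, losing any single node still leaves one copy of every extent, which is the property the RF2 description above relies on.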

All filesystems are created with the default block size of 64 KB. Twelve shares were used, residing on 12 minervafs filesystems distributed across the hosting FSVMs and the underlying storage. Each minervafs filesystem sits on top of a ZFS filesystem (zpool) and is composed of data disks, metadata disks, and an intent log for small-block random write commits.

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 100 GbE Ethernet | 12 | Each Nutanix node has [2] 100 GbE ports configured active-active in a port channel bond with LACP load balancing. |
| 2 | 1 GbE Ethernet | 6 | Each Nutanix node has [1] 1 GbE port configured for IPMI access for node hardware management. |

Each Nutanix node uses 2 x 100 GbE ports, for a total of 12 x 100 GbE ports. In the event of a host failure, the hosted VMs, with the exception of the CVM, will live migrate to another host. The physical ports are connected to a virtual switch (vSwitch) and configured active-active in an LACP bond. The 100 GbE ports are set to MTU 1500. There is 1 x 1 GbE port per host used for management; no System Under Test data traffic goes through this interface.
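LACP negotiates which ports belong to the bond but leaves frame distribution to the sender, which typically hashes each flow to one member link so that packets within a flow stay in order. The sketch below models that with a CRC32 over a layer-3/4 tuple; the hash fields and function name are assumptions, since the actual hash policy on the AHV bond and the Arista port channel is not stated in this report. It also suggests why nconnect=4 can matter: more TCP connections give the hash more flows to spread across both 100 GbE ports.

```python
import zlib


def pick_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int, num_links: int = 2) -> int:
    """Choose a bond member for a flow by hashing its layer-3/4 tuple.

    The tuple fields and CRC32 hash are illustrative assumptions; real bonds
    use their own configured hash policy. A given flow always maps to the same link.
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % num_links


if __name__ == "__main__":
    # Four connections from one client to one FSVM, as with nconnect=4.
    # Addresses and source ports are hypothetical; 2049 is the standard NFS port.
    for sport in (50001, 50002, 50003, 50004):
        print(sport, "-> link", pick_link("10.0.0.21", "10.0.0.11", sport, 2049))
```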

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | Arista DCS-7160-32CQ-R | 100 GbE | 32 | 12 | We used the default Arista setting of 9214 MTU, which cannot be changed. We used the default host NIC setting of 1500 MTU (a 1518-byte frame). The Arista passes host traffic along at a frame size of 1518 bytes, so no jumbo frames are used for host and VM traffic. The ports for each node are configured in an EtherChannel bond. |
| 2 | Dell PowerConnect 5548 | 10/100/1000 | 48 | 6 | All ports are set to a 1518-byte maximum frame size. This switch is used for the management and configuration network only. |
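The tables above mention both 1500 and 1518 because MTU counts only the payload carried in an Ethernet frame, while frame size counts the whole frame: payload plus a 14-byte Ethernet header (two 6-byte MAC addresses and a 2-byte EtherType) plus a 4-byte frame check sequence. A minimal sketch of that arithmetic, assuming untagged frames unless told otherwise (an 802.1Q VLAN tag adds 4 bytes); the function names are illustrative:

```python
ETH_HEADER = 6 + 6 + 2  # destination MAC, source MAC, EtherType
FCS = 4                 # frame check sequence (CRC32)
VLAN_TAG = 4            # optional 802.1Q tag


def frame_size(mtu: int, vlan_tagged: bool = False) -> int:
    """Maximum Ethernet frame size on the wire for a given MTU."""
    return mtu + ETH_HEADER + FCS + (VLAN_TAG if vlan_tagged else 0)


def is_jumbo(mtu: int) -> bool:
    """Treat any MTU above the standard 1500 bytes as a jumbo frame."""
    return mtu > 1500


if __name__ == "__main__":
    print(frame_size(1500))  # host NIC MTU used in this test
    print(is_jumbo(1500))
```

So a host at MTU 1500 never emits frames larger than 1518 bytes, and a switch port that accepts 9214 simply forwards them unchanged.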

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 8 | CPU | Node | Intel(R) Xeon(R) Gold 6336Y CPU @ 2.40GHz | Each hardware node has 2 CPUs, each with 24 cores. These shared CPUs handle all client, server, network, and storage processing. |
| 2 | 4 | CPU | Node | Intel(R) Xeon(R) Gold 5315Y CPU @ 3.20GHz | Each hardware node has 2 CPUs, each with 8 cores. These shared CPUs handle all client, server, network, and storage processing. |

The physical cores are used as vCPUs by the virtual machines deployed on the private cloud platform. In this test configuration, each of the [6] CVMs had 16 vCPUs pinned to one NUMA node, and each of the [4] FSVMs had 16 vCPUs pinned to the other NUMA node. The 12 Linux load-generating client VMs each had 8 vCPUs, and the orchestration client had 4 vCPUs.
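A quick tally of the vCPU allocation above against the physical cores in the CPU table. The VM counts and sizes come from the paragraph above; the thread count assumes two hardware threads per core (Hyper-Threading enabled), which this report does not state explicitly, and the helper names are illustrative.

```python
# Physical CPUs from the CPU table: (nodes, sockets per node, cores per socket)
NODE_TYPES = {
    "Gold 6336Y": (4, 2, 24),
    "Gold 5315Y": (2, 2, 8),
}

# VM allocation from the text: (count, vCPUs each)
VMS = {
    "CVM": (6, 16),
    "FSVM": (4, 16),
    "load client": (12, 8),
    "orchestration client": (1, 4),
}

THREADS_PER_CORE = 2  # assumption: Hyper-Threading enabled


def physical_cores() -> int:
    return sum(nodes * sockets * cores for nodes, sockets, cores in NODE_TYPES.values())


def allocated_vcpus() -> int:
    return sum(count * vcpus for count, vcpus in VMS.values())


if __name__ == "__main__":
    cores = physical_cores()
    print(f"physical cores: {cores}, hardware threads: {cores * THREADS_PER_CORE}")
    print(f"allocated vCPUs: {allocated_vcpus()}")
```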

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| 4 NX-8170-G8 nodes with 1024 GiB of memory. | 1024 | 4 | V | 4096 |
| 2 NX-8170-G8 nodes with 512 GiB of memory. | 512 | 2 | V | 1024 |
| Grand Total Memory Gibibytes | | | | 5120 |

The physical memory is used by the virtual machines deployed on the private cloud platform. In this test configuration, each of the [6] CVMs had 64 GB and each of the [4] FSVMs had 384 GB. The 12 Linux load-generating client VMs each had 192 GB, and the orchestration client had 4 GB. Each FSVM uses a large portion of its memory for the filesystem buffer cache, which we set to 90% of system memory.
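The memory figures above can be cross-checked the same way: sum the per-VM allocations, compare them with the memory table, and compute the FSVM buffer cache ceiling. This sketch assumes the "GB" figures in the text are the same GiB units used in the memory table; names are illustrative.

```python
# Memory allocation from the text: (count, GiB each); units assumed to match the table.
VM_MEMORY = {
    "CVM": (6, 64),
    "FSVM": (4, 384),
    "load client": (12, 192),
    "orchestration client": (1, 4),
}
PHYSICAL_GIB = 4 * 1024 + 2 * 512  # from the memory table
BUFFER_CACHE_FRACTION = 0.90       # FSVM filesystem buffer cache limit


def allocated_memory() -> int:
    return sum(count * size for count, size in VM_MEMORY.values())


def fsvm_buffer_cache_limit() -> float:
    """Upper bound on one FSVM's filesystem buffer cache."""
    _, fsvm_mem = VM_MEMORY["FSVM"]
    return fsvm_mem * BUFFER_CACHE_FRACTION


if __name__ == "__main__":
    print(f"allocated to VMs: {allocated_memory()} of {PHYSICAL_GIB} GiB")
    print(f"per-FSVM buffer cache limit: {fsvm_buffer_cache_limit():.1f} GiB")
```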

Nutanix Files commits all synchronous NFS writes to stable, permanent storage. The data is not cached in a battery-backed RAM device before it is committed. The systems are protected by redundant power supplies and by highly available clustering software at both the Files level and the AOS level. All committed writes are protected by the Nutanix DSF using Replication Factor 2 (RF2), which means two copies of the data exist. In the event of a power failure, any write in transit will not be acknowledged. If there are cascading power failures, or if the system is powered down for multiple days, the cluster restarts DSF and Files when the nodes boot, and the filesystems recover automatically.
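The durability rule above can be modeled in a few lines, assuming (as the RF2 description implies) that a synchronous write is acknowledged only once both copies are on stable storage, so a write caught by a power failure is never acknowledged. This is a toy sketch: the `Replica` class and its `powered` flag are hypothetical stand-ins rather than Nutanix interfaces, and a real system would write the copies in parallel.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """Hypothetical stand-in for one copy's stable storage."""
    name: str
    powered: bool = True
    blocks: dict[int, bytes] = field(default_factory=dict)

    def persist(self, offset: int, data: bytes) -> bool:
        if not self.powered:
            return False  # the write never reaches stable media
        self.blocks[offset] = data
        return True


def sync_write(replicas: list[Replica], offset: int, data: bytes, rf: int = 2) -> bool:
    """Return True (acknowledge) only if all rf copies were persisted.

    Assumption for illustration: the acknowledgement waits for every copy.
    """
    persisted = [r.persist(offset, data) for r in replicas[:rf]]
    return len(persisted) == rf and all(persisted)


if __name__ == "__main__":
    a, b = Replica("node1"), Replica("node2")
    print(sync_write([a, b], 0, b"hello"))  # True: both copies durable, so acknowledged
    b.powered = False
    print(sync_write([a, b], 4096, b"x"))   # False: one copy lost power, so not acknowledged
```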

There were 13 Linux client VMs in total driving the SPECstorage Solution 2020_ai_image workload. The orchestration client did not run the workload but directed the 12 load-generating clients to run it. Each client was configured with a single virtual network interface. Each FSVM has a single interface for incoming client NFS traffic.

The Spectre/Meltdown mitigations are as follows:

Nutanix File Server Storage VM: Linux kernel 4.14.243-1.nutanix.20220309.el7.x86_64, set to mitigations=auto. Critical note: Nutanix service VMs are treated as a “black box” configuration and are restricted from running untrusted code. Future versions of this platform will re-evaluate the mitigation configuration in favor of a more optimal one, because mitigations=auto results in a slightly over-mitigated configuration and therefore higher than desired guest kernel overhead. Future modifications should result in better performance.

Nutanix Backend Storage Controller VM: Linux kernel 3.10.0-1160.66.1.el7.nutanix.20220623.cvm.x86_64, set to mitigations=off. Critical note: Nutanix service VMs are treated as a “black box” configuration and are restricted from running untrusted code. Future versions of this platform will re-evaluate the mitigation configuration in favor of a more optimal one, because mitigations=off requires the hypervisor to switch in and out of Enhanced IBRS on every VM exit and VM entry, resulting in higher than desired hypervisor kernel overhead. Future modifications should result in better performance.

Nutanix Acropolis Hypervisor: Linux kernel 5.10.117-2.el7.nutanix.20220304.227.x86_64, set to mitigations=auto. Critical note: the Nutanix hypervisor is treated as a “black box” configuration and is restricted from running untrusted code. Future versions of this platform will reduce VM-exit overhead for guest VMs that have Enhanced IBRS enabled: MSR filtering for SPEC_CTRL is currently configured in a way that causes an expensive MSR read on every VM exit, resulting in higher than desired hypervisor kernel overhead. Future versions will optimize this filtering, which should result in better performance.
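The mitigations setting quoted for each component is a Linux kernel boot parameter, so one way to confirm it on a running system is to inspect the kernel command line in /proc/cmdline. The helper below parses such a string; on upstream kernels that support the parameter, the default when it is absent is auto. The function names are hypothetical and the sample command line is made up.

```python
def mitigations_setting(cmdline: str) -> str:
    """Return the value of the mitigations= parameter on a kernel command line.

    Falls back to "auto", the upstream default when the parameter is absent.
    If the parameter is repeated, the last occurrence is kept.
    """
    value = "auto"
    for token in cmdline.split():
        if token.startswith("mitigations="):
            value = token.split("=", 1)[1]
    return value


def read_proc_cmdline(path: str = "/proc/cmdline") -> str:
    """Read the running kernel's command line (Linux only)."""
    with open(path, encoding="utf-8") as f:
        return f.read().strip()


if __name__ == "__main__":
    sample = "BOOT_IMAGE=/vmlinuz ro console=ttyS0 mitigations=off"  # made-up example
    print(mitigations_setting(sample))
```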

None

Please refer to the diagrams entitled "Networks" and "Data Storage Layers". Each of the 12 load-generating clients mounted a separate share over NFSv3 with nconnect set to 4. The shares were evenly distributed across the 4 FSVMs that make up the Nutanix Files file server.
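Distributing 12 shares evenly over 4 FSVMs works out to 3 shares per FSVM. A round-robin assignment is one simple way to reach that layout; the report does not say how the shares were actually assigned, so the policy and the share and FSVM names below are illustrative assumptions.

```python
from collections import Counter


def assign_shares(num_shares: int, fsvms: list[str]) -> dict[str, str]:
    """Round-robin shares over FSVMs (even when num_shares divides evenly).

    Share and FSVM names are hypothetical placeholders.
    """
    return {f"share{i + 1:02d}": fsvms[i % len(fsvms)] for i in range(num_shares)}


if __name__ == "__main__":
    placement = assign_shares(12, [f"fsvm{i}" for i in range(1, 5)])
    print(Counter(placement.values()))
```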

The dataset was created by an initial test and left in place. Then the clients re-tested against the dataset with 10 equally spaced load points. All default background services and space reduction technologies were enabled.
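The report gives only the peak result (300 AI_Jobs) and the number of load points. Assuming the 10 equally spaced points start at one step and end at the peak, so that the step equals the first load point, the schedule would be 30, 60, ..., 300 AI_Jobs. That is an inference rather than data from the run, and the helper name is illustrative.

```python
def load_points(peak: int, num_points: int) -> list[int]:
    """Equally spaced load points ending at `peak`, with step = peak / num_points.

    Assumes the first point equals the step size; the report does not state this.
    """
    if peak % num_points:
        raise ValueError("peak must be divisible by num_points for integer steps")
    step = peak // num_points
    return [step * (i + 1) for i in range(num_points)]


if __name__ == "__main__":
    print(load_points(300, 10))
```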

Nutanix, AOS, and AHV are registered trademarks of the Nutanix Corporation in the U.S. and/or other countries. Intel and Xeon are registered trademarks of the Intel Corporation in the U.S. and/or other countries.

Generated on Tue Aug 16 14:39:50 2022 by SpecReport
